Data Science & Analytics

Data Science and Analytics is a versatile domain used to derive insights, make data-driven decisions, and solve complex problems. It combines key methodologies such as Data Extraction, Data Preparation, Data Modeling, and Visualization. At TetraSkills, we train future-ready professionals to master the skills and tools of Data Science and Analytics and to build impactful solutions for modern businesses.

Enroll Today – Classes Begin: Soon

Registration Open

Price: 14,000/- INR

200+ hr Programme

600+ Students Enrolled

Offline Batch

In our Learning Center

Online Batch

Connect from anywhere

Internship Opportunities

About the Data Science & Analytics Course


Industry-Relevant Skills:

Master the tools and technologies that are in demand across leading industries.

Hands-On Experience:

Work on real-world projects to gain practical knowledge and confidence.

Expert Guidance:

Learn from experienced professionals who provide insights and mentorship.

Certification:

Earn industry-recognized internship and course completion certificates.

Career Opportunities:

Enhance your employability with skills that stand out in the competitive job market.

Comprehensive Learning:

Gain a deep understanding of both foundational and advanced concepts.

Personal Growth:

Develop problem-solving, communication, and teamwork skills essential for success.

Career Transition:

More than 1,200 learners upgraded their careers in the last 12 months.

Course Offerings

Diverse Project Portfolio

Practice Exercises

Doubt Clearing Sessions

Dedicated Buddy Support

Industry Oriented Curriculum

Industry Recognized Certificate

Q&A Forum

Instructor Led Sessions

Peer to Peer Networking

Email Support

Mock Interviews

Module Level Assignments

Become a Tools Expert

Course Structure

Fundamentals

Key Areas Covered

Introduction to Data Science & Analytics

- Understanding the role of Data Science in modern business decision-making.

- Key differences between Data Science, Data Analytics, and Data Engineering.

- Real-world use cases across industries.

Data Types and Formats

- Structured, Semi-Structured, and Unstructured Data.

- Common data formats: CSV, JSON, Excel, SQL databases.

The Data Science Lifecycle

- Data Collection: Gathering data from various sources (databases, APIs, web scraping).

- Data Cleaning: Removing inconsistencies, handling missing data, and ensuring quality.

- Data Analysis: Identifying trends and patterns.

- Data Visualization: Presenting insights through graphs, charts, and dashboards.

- Interpretation and Communication of Results.

Tools for Data Science and Analytics

- Programming Languages: Python, R.

- Data Manipulation Libraries: Pandas, NumPy.

- Visualization Libraries: Matplotlib, Seaborn, Tableau.

- SQL for database querying and manipulation.

Introduction to Statistics

- Basic statistical measures (mean, median, mode, standard deviation).

- Probability basics and its application in data analysis.
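As a small illustration of the basic statistical measures listed above, the sketch below uses Python's built-in `statistics` module. The `orders` list is a made-up sample, not course data.

```python
import statistics

# Hypothetical sample: daily order counts for one week
orders = [12, 15, 12, 18, 20, 15, 12]

mean_orders = statistics.mean(orders)      # arithmetic average
median_orders = statistics.median(orders)  # middle value of the sorted data
mode_orders = statistics.mode(orders)      # most frequent value
stdev_orders = statistics.stdev(orders)    # sample standard deviation

print(f"mean={mean_orders:.2f}, median={median_orders}, "
      f"mode={mode_orders}, stdev={stdev_orders:.2f}")
```

Note that `statistics.stdev` computes the sample standard deviation; `statistics.pstdev` would give the population version.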

Problem-Solving with Data

- Formulating data-driven questions.

- Exploratory Data Analysis (EDA) to uncover insights.

Ethics in Data Science

- Understanding bias in data.

- Privacy and confidentiality in handling datasets.

Basics

Introduction to Python Programming

Learn the basics of Python, including syntax, code structure, and running Python programs.

Variables, Data Types, and Operators

Understand how to store, manipulate, and use data with variables, data types (strings, integers, etc.), and operators.

Control Structures (if-else, loops)

Master decision-making and repetition in Python using conditional statements and loops.

Functions and Modules

Explore reusable blocks of code with functions and how to use/import modules for added functionality.

Core

Introduction to Python Libraries: NumPy, Pandas, and Matplotlib

Get familiar with essential libraries for data manipulation, analysis, and visualization.

Data Manipulation using Pandas

Learn to clean, filter, aggregate, and transform datasets efficiently using Pandas.

Working with Arrays using NumPy

Perform numerical computations and work with multi-dimensional arrays using NumPy.

File Handling and Exception Handling

Understand how to read/write files and handle errors gracefully during execution.
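To make the file handling and exception handling topic concrete, here is a minimal sketch using only the standard library. The helper name `read_rows`, the file name `sales.csv`, and the sample rows are all hypothetical.

```python
import csv
import os
import tempfile

def read_rows(path):
    """Return CSV rows as a list of dicts, or an empty list if the file is missing."""
    try:
        with open(path, newline="", encoding="utf-8") as handle:
            return list(csv.DictReader(handle))
    except FileNotFoundError:
        # Handle the error gracefully instead of crashing the script
        print(f"File not found: {path}")
        return []

# Write a small hypothetical dataset to a temporary file, then read it back
with tempfile.TemporaryDirectory() as tmp_dir:
    csv_path = os.path.join(tmp_dir, "sales.csv")
    with open(csv_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["region", "amount"])
        writer.writerow(["East", "120"])
        writer.writerow(["West", "95"])

    rows = read_rows(csv_path)
    missing = read_rows(os.path.join(tmp_dir, "does_not_exist.csv"))

print(rows)
print(missing)
```

The `with` blocks close each file automatically, even if an error occurs while reading or writing.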

Intermediate

Exploratory Data Analysis (EDA) Techniques

Analyze datasets to discover patterns, trends, and insights using Python tools.

Data Visualization with Seaborn and Matplotlib

Create stunning and informative charts and graphs to present your data.

APIs and JSON Data Processing

Learn to interact with APIs to retrieve data and process JSON data for analysis.

Basic Statistics using Python

Apply statistical methods like mean, median, mode, variance, and more using Python.
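The JSON processing topic above can be sketched as follows. The response text is a hypothetical stand-in for what an API might return; a real workflow would fetch it over HTTP first.

```python
import json

# Hypothetical API response body; a real one would come from an HTTP request
response_text = """
{
  "results": [
    {"city": "Bhubaneswar", "temperature_c": 31.5},
    {"city": "Pune", "temperature_c": 27.0},
    {"city": "Cuttack", "temperature_c": null}
  ]
}
"""

payload = json.loads(response_text)

# Keep only records with a usable temperature (JSON null becomes Python None)
valid = [r for r in payload["results"] if r["temperature_c"] is not None]
average_temp = sum(r["temperature_c"] for r in valid) / len(valid)

print([r["city"] for r in valid])
print(average_temp)
```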

Advanced

Advanced Data Wrangling

Dive deep into reshaping, merging, and cleaning complex datasets for analysis.

Database Integration with Python (SQLAlchemy, SQLite)

Connect Python to databases, perform queries, and integrate SQL for data storage and retrieval.

Automation and Script Development

Build scripts to automate repetitive tasks and improve efficiency in data workflows.

Real-World Applications and Case Studies

Apply all learned skills to real-world scenarios and work on hands-on projects to solidify knowledge.
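For the database integration topic, a minimal sketch with Python's built-in `sqlite3` module might look like this. The `sales` table and its rows are invented for illustration, and SQLAlchemy is left out to keep the example dependency-free.

```python
import sqlite3

# In-memory database, so nothing is written to disk
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")

# Parameterized inserts avoid building SQL strings by hand
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("East", 120.0), ("West", 95.0), ("East", 80.0)],
)
conn.commit()

# Aggregate in SQL and fetch the result into Python
totals = conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()
conn.close()

print(totals)
```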

Basics

Introduction to Statistics

Learn the foundation of statistics, including its role in data analysis and decision-making.

Measures of Central Tendency (Mean, Median, Mode)

Understand how to summarize data using measures that represent the center of a dataset.

Measures of Dispersion (Variance, Standard Deviation)

Explore how to measure data spread and variability within datasets.

Core

Probability Concepts and Distributions

Study the basics of probability, probability distributions (normal, binomial, etc.), and their applications.

Hypothesis Testing

Learn to formulate and test hypotheses to draw conclusions about population parameters.

Correlation and Regression Analysis

Understand relationships between variables and predict outcomes using linear regression models.
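As a rough illustration of correlation and simple linear regression, the sketch below computes the Pearson coefficient and a least-squares line by hand. The `x` and `y` values are hypothetical; in practice a library such as NumPy or scikit-learn would usually do this work.

```python
import math

# Hypothetical data: advertising spend (x) and sales (y)
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.1, 6.2, 7.9, 10.1]

n = len(x)
mean_x = sum(x) / n
mean_y = sum(y) / n

# Sums of squares and cross-products around the means
s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
s_xx = sum((xi - mean_x) ** 2 for xi in x)
s_yy = sum((yi - mean_y) ** 2 for yi in y)

r = s_xy / math.sqrt(s_xx * s_yy)    # Pearson correlation coefficient
slope = s_xy / s_xx                  # least-squares slope
intercept = mean_y - slope * mean_x  # least-squares intercept

predicted = intercept + slope * 6.0  # predict y for a new x value
print(f"r={r:.3f}, slope={slope:.2f}, intercept={intercept:.2f}, "
      f"prediction={predicted:.2f}")
```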

Intermediate

Sampling Methods and Techniques

Explore different sampling techniques and their importance in statistical analysis.

Analysis of Variance (ANOVA)

Learn how to compare means across multiple groups to identify significant differences.

Time Series Analysis

Study data that varies over time, identify trends, and make forecasts using statistical methods.

Advanced

Multivariate Statistics

Analyze datasets with multiple variables and understand techniques like PCA, cluster analysis, and factor analysis.

Bayesian Statistics

Learn probabilistic inference using Bayes’ theorem and apply Bayesian approaches to statistical modeling.

Statistical Model Validation

Ensure the accuracy and reliability of statistical models through validation techniques like cross-validation and residual analysis.
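A short worked example may help with the Bayes' theorem topic mentioned above. The probabilities are hypothetical numbers for a screening test, chosen only to show the arithmetic.

```python
# Hypothetical numbers for a screening test
prior = 0.01           # P(condition)
sensitivity = 0.95     # P(positive | condition)
false_positive = 0.05  # P(positive | no condition)

# Total probability of a positive result
p_positive = sensitivity * prior + false_positive * (1 - prior)

# Bayes' theorem: P(condition | positive)
posterior = sensitivity * prior / p_positive
print(round(posterior, 3))
```

Even with a fairly accurate test, the posterior stays well below 50% here because the condition is rare under the assumed prior.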

Basic Topics

Introduction to Data Visualization

Understand the importance of data visualization in data analysis and decision-making.

Fundamentals of Visualizing Data

Learn key principles of effective data visualization, including clarity, simplicity, and accuracy.

Creating Basic Plots (Bar, Line, Scatter)

Start creating simple yet powerful visualizations such as bar charts, line graphs, and scatter plots.

Core Concepts

Data Visualization with Matplotlib

Master Python’s foundational visualization library to create static and dynamic plots.

Advanced Visualizations with Seaborn

Explore advanced statistical visualizations such as box plots, violin plots, and pair plots.

Dashboard Creation with Tableau

Learn to build interactive dashboards and visual storytelling using Tableau.

Intermediate Topics

Interactive Visualizations using Plotly

Create interactive and engaging charts such as 3D plots, animated graphs, and more using Plotly.

Storytelling with Data Visualizations

Learn to design visualizations that effectively communicate insights and narratives.

Heatmaps, Pair Plots, and Other Advanced Charts

Dive into advanced visualizations like heatmaps and multi-variable pair plots for deeper insights.

Advanced Topics

Building Data Dashboards

Create comprehensive and interactive dashboards for real-time data monitoring and reporting.

Best Practices for Effective Visualization

Learn design principles to ensure your visualizations are clear, impactful, and professional.

Real-World Visualization Projects

Apply your skills to real-world projects, integrating multiple visualization tools and techniques.

Basic Topics

Introduction to Machine Learning

Learn the core concepts of Machine Learning, its applications, and its role in modern technology.

Types of Machine Learning (Supervised, Unsupervised, Reinforcement)

Understand the three main types of machine learning and when to apply each.

Preparing Data for Machine Learning

Learn data preprocessing techniques, including cleaning, transforming, and splitting data for model training.

Core Concepts

Linear Regression and Logistic Regression

Explore regression techniques for predicting continuous and categorical outcomes.

Clustering Techniques (K-Means, Hierarchical)

Learn how to group data points into clusters based on their similarities.

Feature Engineering and Scaling

Master techniques to extract meaningful features and scale data for optimal model performance.

Intermediate Topics

Decision Trees and Random Forests

Understand tree-based algorithms for classification and regression tasks.

Model Evaluation and Metrics (Confusion Matrix, ROC Curve)

Learn to evaluate model performance using metrics like accuracy, precision, recall, and ROC-AUC.

Dimensionality Reduction (PCA)

Simplify datasets while retaining essential information using Principal Component Analysis (PCA).
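To show how the evaluation metrics named above relate to a confusion matrix, here is a minimal pure-Python sketch. The label lists are hypothetical; a library such as scikit-learn would normally compute these values.

```python
# Hypothetical true labels and model predictions (1 = positive, 0 = negative)
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 1, 0]

pairs = list(zip(y_true, y_pred))

# The four cells of a binary confusion matrix
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

accuracy = (tp + tn) / len(pairs)  # share of all predictions that are correct
precision = tp / (tp + fp)         # share of predicted positives that are correct
recall = tp / (tp + fn)            # share of actual positives that were found

print(f"TP={tp} TN={tn} FP={fp} FN={fn}")
print(f"accuracy={accuracy}, precision={precision}, recall={recall}")
```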

Advanced Topics

Introduction to Neural Networks

Understand the basics of neural networks, including structure and activation functions.

Basics of Deep Learning

Learn foundational concepts of deep learning, including multi-layered networks and backpropagation.

Building End-to-End Machine Learning Models

Gain hands-on experience building and deploying machine learning models for real-world applications.

Basic Topics

Introduction to Big Data

Understand the concept of Big Data, its characteristics (volume, velocity, variety), and its importance in modern analytics.

Basics of Distributed Systems

Learn how distributed systems enable Big Data processing by dividing tasks across multiple nodes.

Overview of Hadoop Ecosystem

Get familiar with the key components of Hadoop, including HDFS, MapReduce, and YARN.

Core Concepts

Working with HDFS

Learn to store, manage, and retrieve large datasets using the Hadoop Distributed File System.

Basics of Apache Spark

Explore the fundamentals of Apache Spark, a fast and general-purpose engine for large-scale data processing.

Data Processing with MapReduce

Understand the MapReduce programming model for processing large datasets in a distributed environment.
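The MapReduce programming model can be imitated on a single machine to see its three phases. The sketch below is a plain-Python word count, not Hadoop code; the function names and sample documents are hypothetical.

```python
from collections import defaultdict
from functools import reduce

# Hypothetical input: each string stands in for one document or file split
documents = ["big data big insights", "data pipelines move data"]

def map_phase(doc):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word, 1) for word in doc.split()]

def shuffle_phase(mapped_pairs):
    """Shuffle: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: combine the values for each key into a single count."""
    return {key: reduce(lambda a, b: a + b, values)
            for key, values in groups.items()}

mapped = [pair for doc in documents for pair in map_phase(doc)]
word_counts = reduce_phase(shuffle_phase(mapped))
print(word_counts)
```

In a real cluster, the map and reduce steps run in parallel on different nodes, and the framework handles the shuffle between them.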

Intermediate Topics

Real-Time Processing with Spark Streaming

Learn how to process and analyze streaming data in real-time using Apache Spark.

Hive and Pig for Big Data Analysis

Explore Hive for SQL-like querying and Pig for data transformation and analysis in the Hadoop ecosystem.

Introduction to Cloud Platforms for Big Data (AWS, Azure, GCP)

Discover how cloud platforms offer scalable solutions for Big Data storage and processing.

Advanced Topics

Handling Large Datasets with Spark MLlib

Use Spark’s MLlib library for scalable machine learning on large datasets.

Optimizing Big Data Pipelines

Learn techniques to improve the efficiency and reliability of data pipelines.

Big Data Project Implementation

Apply Big Data tools and techniques to build and deploy end-to-end projects in real-world scenarios.

Introduction to Node.js

- Introduction to Node.js:

- Setup: node -v and npm -v.

- First Steps:

Understanding Core Concepts

- Modules: (fs, http, path).

- File System:

- Creating a Simple Server: http module to create a basic web server.

Deep Dive into Asynchronous Programming

- Callbacks:

- Promises:

- Async/Await:

Build Projects from Scratch

Customer Churn Prediction Project

The Customer Churn Prediction project is a data-driven application designed to predict whether a customer is likely to stop using a product or service. Built using Python and essential data science libraries, this project demonstrates the use of machine learning techniques to solve real-world business problems.

Key Features:

- Data Processing: Preprocesses raw customer data to clean, transform, and prepare it for model training.

- Exploratory Data Analysis (EDA): Identifies key patterns and trends in customer behavior that lead to churn.

- Machine Learning Model: Implements algorithms like Logistic Regression or Random Forest to predict churn probabilities.

- Visualization: Uses Matplotlib and Seaborn to create visual insights like feature importance and churn trends.

- Actionable Insights: Provides predictions to help businesses target at-risk customers and improve retention strategies.

This project is an excellent practice for applying machine learning, EDA, and data visualization techniques while solving a critical business challenge.

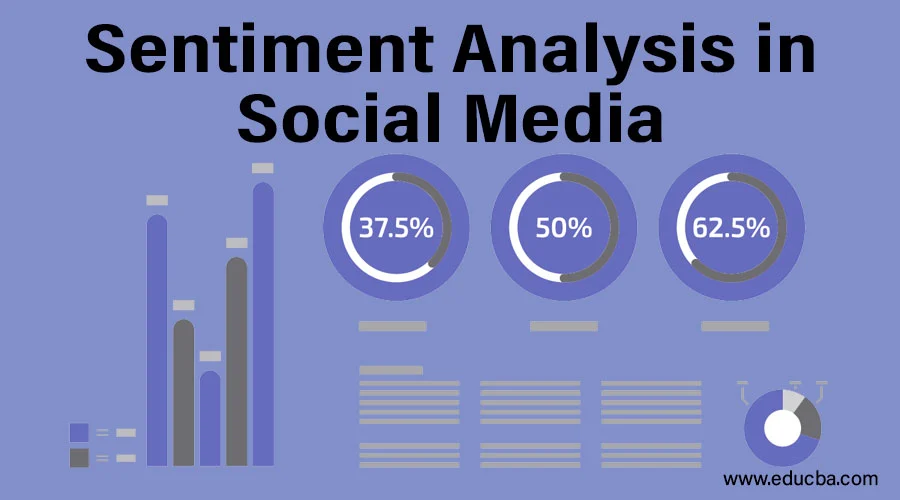

Sentiment Analysis of Social Media Data

The Sentiment Analysis of Social Media Data project is a natural language processing (NLP) application designed to analyze public sentiment on social media platforms like Twitter. Using Python and popular NLP libraries, this project identifies whether a post conveys positive, negative, or neutral emotions.

Key Features:

- Data Collection: Leverages APIs like Twitter API to collect real-time or historical social media posts.

- Data Preprocessing: Cleans and processes text by removing noise, such as hashtags, mentions, links, and stop words.

- Sentiment Classification: Uses machine learning models or pre-trained NLP tools like TextBlob or VADER to classify sentiment.

- Visualization: Generates visual reports such as pie charts and bar graphs to display sentiment distribution across the data.

- Insights for Decision Making: Helps businesses or organizations understand public perception about their brand, products, or campaigns.

This project is an excellent opportunity to practice NLP techniques, API integration, and data visualization, providing valuable insights for sentiment-driven decision-making.

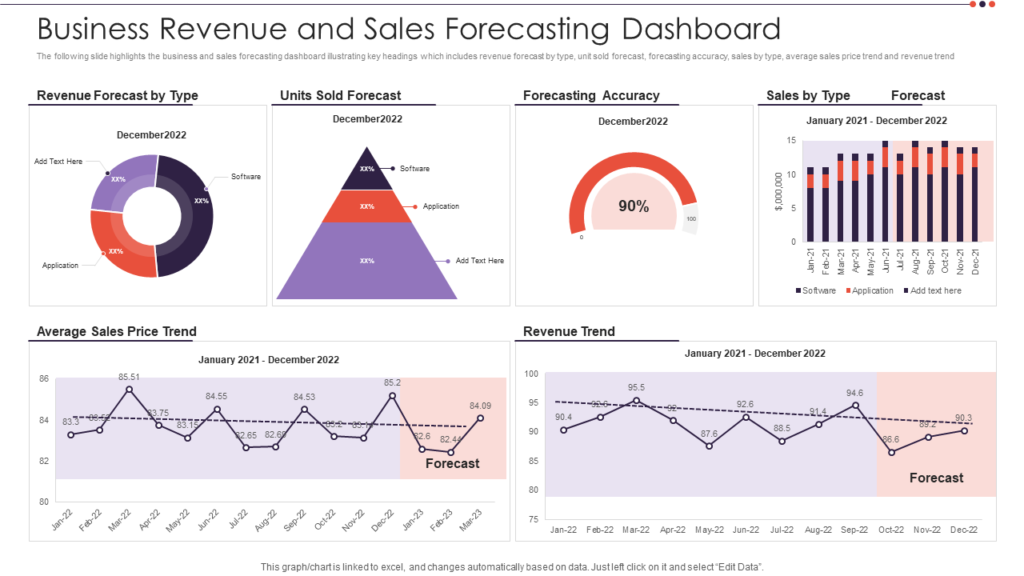

Sales Forecasting Dashboard

The Sales Forecasting Dashboard project is a comprehensive data analytics application designed to visualize historical sales trends and predict future sales using time series analysis. Built using Python, Pandas, and visualization tools, this project offers actionable insights for businesses to optimize inventory and revenue strategies.

Key Features:

- Data Analysis: Processes historical sales data to identify trends, seasonality, and anomalies.

- Time Series Forecasting: Implements forecasting models like ARIMA or Prophet to predict future sales accurately.

- Interactive Visualization: Creates dynamic charts and graphs using tools like Matplotlib, Plotly, or Tableau for clear and engaging dashboards.

- Customization: Allows users to filter and drill down into data by categories, regions, or time periods.

- Actionable Insights: Provides predictions to help businesses with demand planning, budgeting, and decision-making.

This project is an excellent practice for mastering time series analysis, forecasting models, and dashboard creation, helping businesses make data-driven decisions effectively.

Fraud Detection System

The Fraud Detection System project is a machine learning application designed to identify and prevent fraudulent transactions in financial systems. By analyzing patterns and anomalies in transaction data, this project helps businesses mitigate risks and protect their customers.

Key Features:

- Data Preprocessing: Cleans and prepares transaction data, handling imbalances using techniques like SMOTE (Synthetic Minority Over-sampling Technique).

- Anomaly Detection: Identifies unusual patterns using clustering methods like K-Means or distance-based algorithms.

- Machine Learning Models: Utilizes classification algorithms such as Logistic Regression, Random Forest, or Gradient Boosting to predict fraudulent activities.

- Visualization: Creates dashboards to visualize fraud trends, flagged transactions, and model performance metrics like precision and recall.

- Real-Time Monitoring: Enables integration with APIs to monitor transactions in real-time and raise alerts for suspicious activity.

This project is ideal for practicing data preprocessing, classification techniques, and anomaly detection, providing valuable skills for real-world risk management and financial analytics applications.

Meet your Mentors

Our Alumni Are In

Get Certified

Once you complete the offline course, along with its assignment and project, you will be eligible for the Course Certificate.

After working on a live project for a minimum of 1 month, you will receive an Internship Certificate.

- Attendance must be at least 60% across offline training sessions and the internship.

- Score at least 60% in the quiz and assignments.

- Complete 1 project during the internship period.

Student Reviews

Biswaranjan Nayak

Internship program is good and teaching skills of mentors are very good.

Omprakash Jena

The teaching skills are good overall, but the mentoring skills can be improved. The office curriculum for interns should be better.

Roumya Prakash Sahoo

The Training + Internship program offered invaluable learning opportunities and practical experience, greatly enhancing my skill set and professional growth. However, the management faced challenges in efficiency, where better organization, improved communication, and streamlined coordination could have elevated the overall experience, making it more seamless and fulfilling.

Twinkel Sahani

The ‘Training + Internship Program’ was highly valuable, combining clear training sessions with practical projects. It enhanced my technical skills and provided real-world experience, allowing me to apply what I learned effectively.

Sambit Parida

I feel glad to have gained so much knowledge and learned so many things that were new to me. It has been a wonderful experience that will stay with me throughout my life. Thank you; I feel pleased to be part of this company.

Saurav Kumar Behera

I am grateful for the incredible knowledge and new experiences I have gained, which will positively impact my future. Being part of this company has been a truly enriching journey, and I am thankful for the opportunity.

Satyajit Das

I’m very happy with your internship program and thankful to your team

Success Stories

Cracked First Job

Sourav Kumar Samantaray

2025 MCA

Continuing MERN Training + Internship Program in TetraSkills

Job - TCS

Cracked First Job

Sai Som Biswal

2025 B-Tech CSE

Continuing MERN Training + Internship Program in TetraSkills

Job - Tech Mahindra

Cracked First Job

Rudra Narayan Swain

MCA 2024

Completed Data Science Certification from TetraSkills, Landed Internship in Data

Job - LTIMindtree, Pune

Cracked First Job

Ch. Monalisa Padhi

2024

Completed MERN Stack Web Development

Job - Total technology system, Bhubaneswar

Cracked First Job

Subhasmita Maharana

2024

Completed MERN Stack Web Development

Job - Pinnacle Consulting, Bhubaneswar

Cracked First Job

ASHUTOSH SAHOO

B-tech 2024

Completed MERN Stack Web Development

Job - SBI Headquarter, Bhubaneswar

Cracked First Job

Sweta Sipra Panda

B-tech 2024

Completed MERN Stack Web Development

Job - Marquee Semiconductor, DLF, BBSR

Cracked First Job

Susmita Dash

B-tech 2024

Completed MERN Stack Web Development

Job - Marquee Semiconductor, DLF, BBSR

Cracked First Job

Anjali Kumai

2024 B-TECH

Completed Web developer and graphics design Internship in XLNC.io through TetraSkills Program

Job - Digit Insurance, BBSR

Cracked First Job

Subham Subhasis Sahoo

2024 MCA

Completed MERN Stack Web Development

Job - Qualitas Global, PUNE

Cracked First Job

Jayadev Satapathy

2024 MCA

Completed Frontend Web Development

Job - Oasys Tech Solutions Pvt Ltd., BBSR

Cracked First Job

Anand Kumar

2024 B-TECH

Completed MERN Stack Web Development, got stipend based Internship in TetraTrion

Job - MindFire Solutions, Bhubaneswar

TetraSkills FAQ

What is the refund policy?

TetraSkills operates a strict no-refunds policy for all purchased paid courses. Once a course purchase is confirmed, payment cannot be refunded. This policy applies to all courses, irrespective of the price, duration, or the learner’s progress within the course.

I need to enroll in a course. Whom should I contact?

To enroll in a course, you can:

- Fill out the form for the course you are interested in, and our team will connect with you over the phone.

- Alternatively, if you are in Bhubaneswar, you can visit our offline Learning Center directly for enrollment assistance.

Does TetraSkills provide job assistance and on-campus placement?

TetraSkills does not directly provide job assistance or on-campus placements. Instead, we focus on equipping students with the skills and experience needed to succeed in placements. Our offerings include:

- Mock interviews and group discussions.

- Practical skill-building exercises.

- Hands-on experience through internships with partner companies.

Through these programs, students become placement-ready and gain the confidence to excel in their career paths.